Instability of LIME Explanations

Giorgio Visani

How to Deal with It? Behold the Stability Indices

In this article, I focus on a very specific aspect of the LIME framework for explaining machine learning predictions. I already described the method in a previous article, where I also gave the intuition behind it and discussed its strengths and weaknesses (have a look at it if you haven't yet). Here, I discuss one specific LIME issue, namely the instability of its explanations, and provide a recipe to spot and deal with it.

LIME Instability

What do we mean by Instability?

Instability of the explanations means that if you run LIME several times on the same individual, with the same parameters and settings, you may end up with totally different explanations.

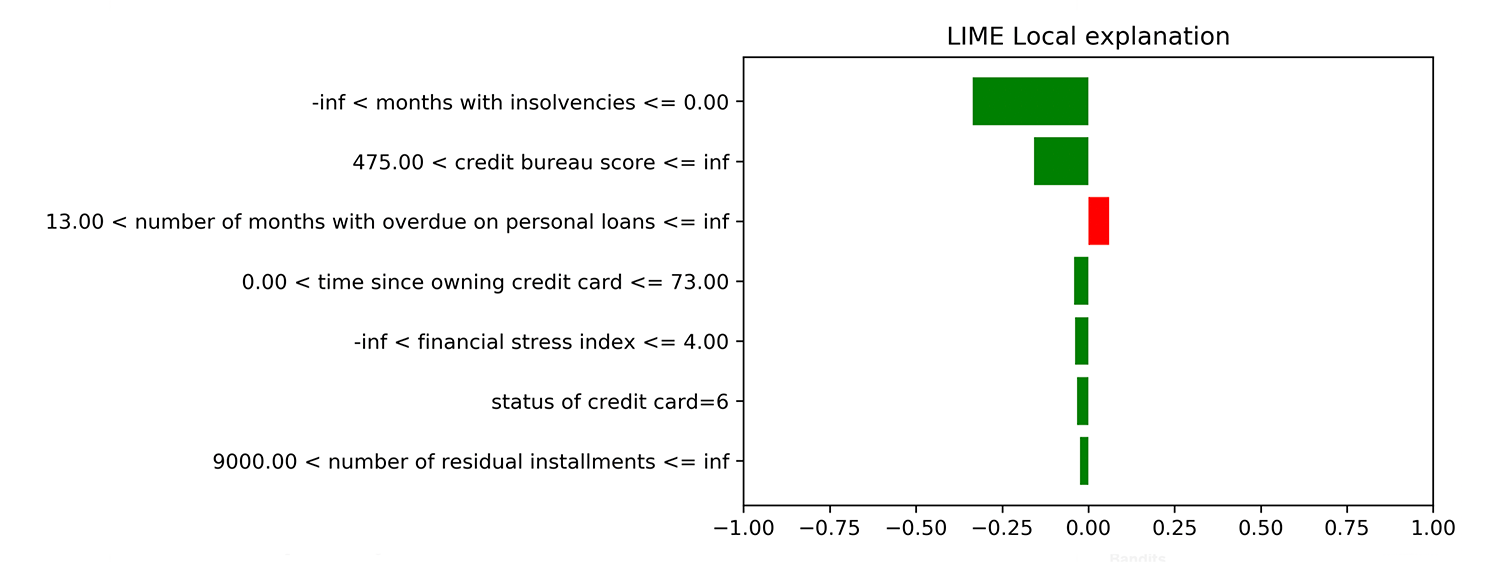

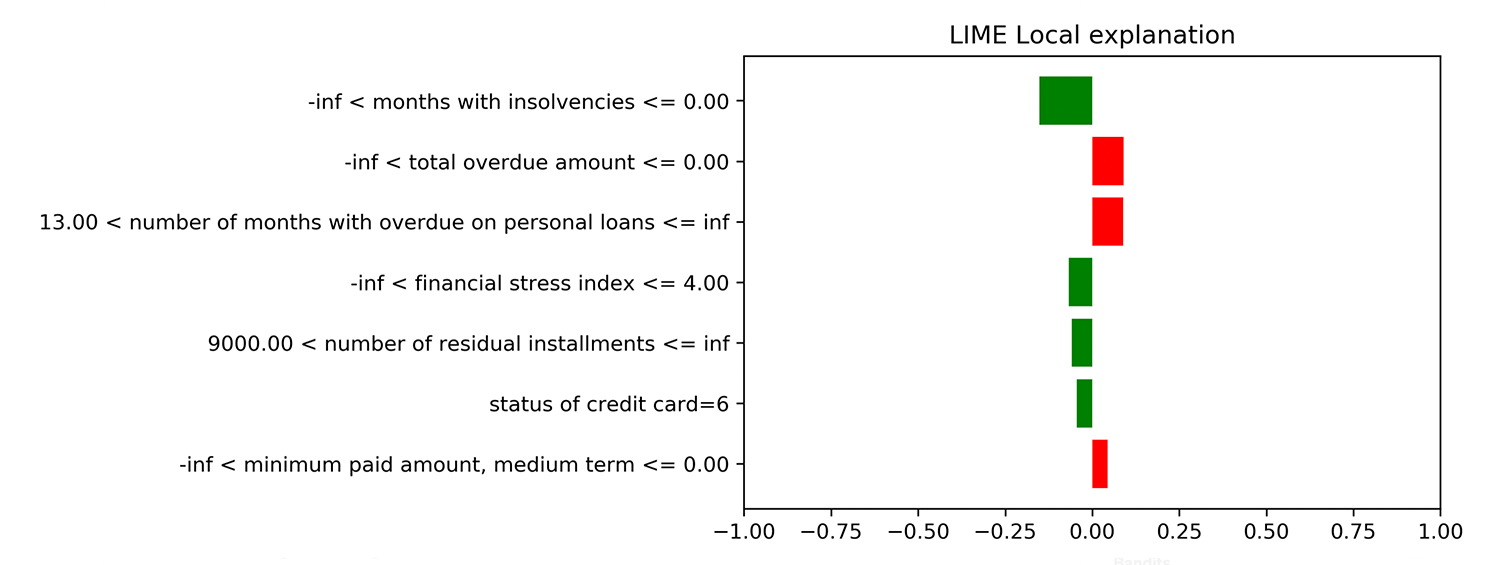

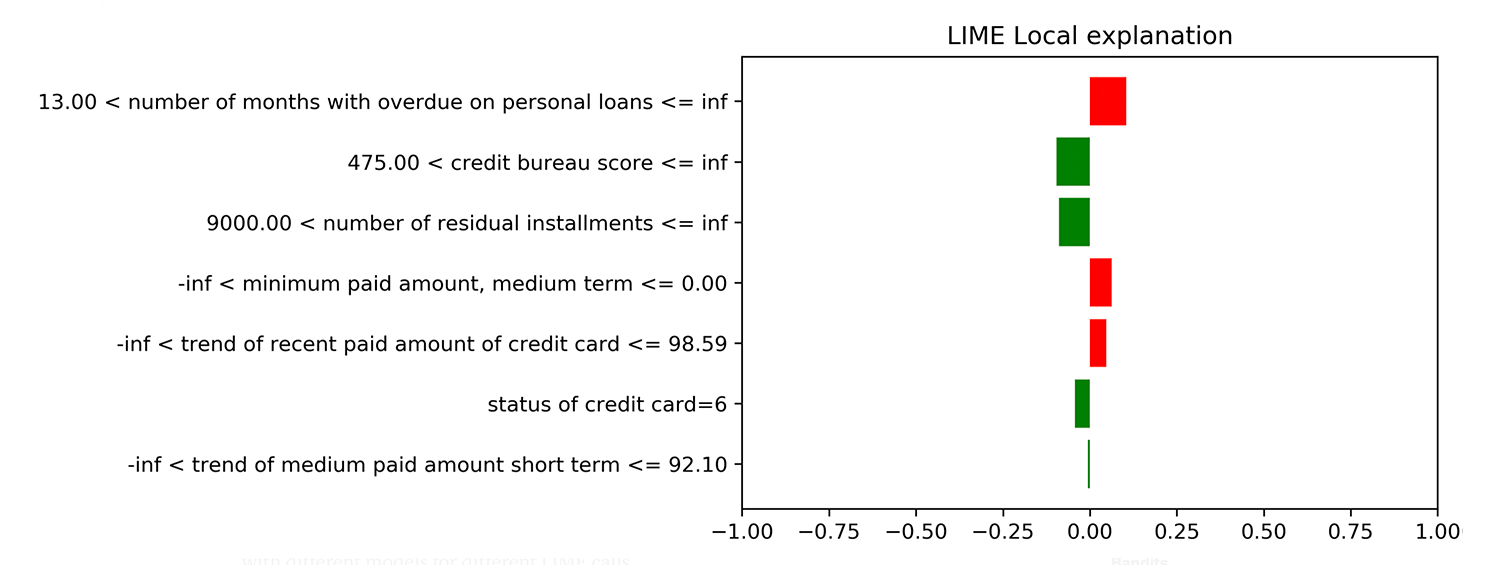

Three explanations for the same individual, generated with LIME using fixed parameters. The explanations are strikingly different, and this is due to the Instability. Source here.

Why does LIME suffer from Instability?

Because of the Generation Step. Remember that LIME generates x values all over the ℝᵖ space of the X variables of the dataset (explained here).

The points are generated at random, so each call to LIME creates a different dataset. Since the dataset is used to train the LIME Linear Model, we may end up with different models for different LIME calls.
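To make the mechanism concrete, here is a minimal one-feature sketch of a LIME-style generate, weight and fit loop, written with NumPy. Everything in it is an assumption made for illustration: the toy black-box function `f`, the helper `lime_like_slope`, sampling from a normal distribution centred on the reference point, and the exponential proximity kernel. It is not the `lime` library's code. Calling it three times with identical settings, where only the random draw changes, returns three different slopes.

```python
import numpy as np


def f(x):
    # Hypothetical black-box ML model: a wiggly 1-D function
    return np.sin(3 * x) + 0.5 * x


def lime_like_slope(x0, n_samples=50, kernel_width=0.5, seed=None):
    """Illustrative LIME-style explanation for one feature (not the lime library)."""
    rng = np.random.default_rng(seed)
    x = x0 + rng.normal(size=n_samples)                 # generation step: random points
    y = f(x)                                            # ask the black box for predictions
    w = np.exp(-((x - x0) ** 2) / kernel_width ** 2)    # closer points weigh more
    X = np.column_stack([np.ones_like(x), x])           # intercept + feature
    sw = np.sqrt(w)                                     # weighted least squares via row rescaling
    coef, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return coef[1]                                      # the slope is the "explanation"


# Same individual, same settings, three calls: only the random draw changes
slopes = [lime_like_slope(0.0, seed=s) for s in range(3)]
print([round(float(s), 3) for s in slopes])
```

Fixing the seed would make the output reproducible, but it only hides the problem: any other seed is just as legitimate and gives a different explanation.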

Trust Issue caused by the Instability

Instability is very harmful to LIME.

To convince you of this, let me tell you a short anecdote:

Consider a bank using an ML model to decide whether to grant loans to its customers. The ML model is trained to predict whether a person will repay the loan or default. This is an extremely important decision, so the bank wants to understand well how its model works, and decides to check why the ML model denied credit to a particular guy.

The bank runs LIME three times in a row on the same guy, and the explanations are completely different… Which one should it trust? With contradictory explanations, the bank can neither justify its decision to the customer nor check whether the model reasons sensibly.

Now that we understand the problem and its consequences, let's analyze how to solve it.

The first thing we may wonder about is:

Why don’t we get rid of the Generation Step, since it is the cause of Instability?

This is exactly what the authors of DLIME [2] tried to do. Their idea is to build the LIME Linear Model using only the individuals in the training dataset, without generating new points inside LIME.

They take the training values xᵢ, obtain the corresponding predictions ŷᵢ from the ML model, and then weight each unit according to its distance from the individual to be explained (using a Gaussian kernel).
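A minimal sketch of this training-points-only idea: reuse the training inputs, score them with the model, weight them with a Gaussian kernel and fit a weighted line. The toy model `f`, the helper `training_data_slope` and the synthetic training set are hypothetical, and the sketch follows the description in this article rather than the published DLIME code. Because nothing is sampled inside the function, repeated calls return exactly the same slope.

```python
import numpy as np


def f(x):
    # Hypothetical black-box ML model
    return np.sin(3 * x) + 0.5 * x


def training_data_slope(x0, x_train, kernel_width=0.5):
    """Deterministic local line fitted on the training points only (illustrative)."""
    y_hat = f(x_train)                                              # ML predictions ŷᵢ
    w = np.exp(-((x_train - x0) ** 2) / (2 * kernel_width ** 2))    # Gaussian kernel weights
    X = np.column_stack([np.ones_like(x_train), x_train])           # intercept + feature
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(X * sw[:, None], y_hat * sw, rcond=None)
    return coef[1]


# A fixed synthetic training set: the local dataset no longer changes between calls
x_train = np.random.default_rng(0).uniform(-2, 2, size=200)
first = training_data_slope(0.0, x_train)
second = training_data_slope(0.0, x_train)
print(first == second)
```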

In this way, LIME’s starting dataset stays the same for each call.

However, they did not consider an important drawback:

We may have regions with very few training points, where our linear approximation will be very rough!

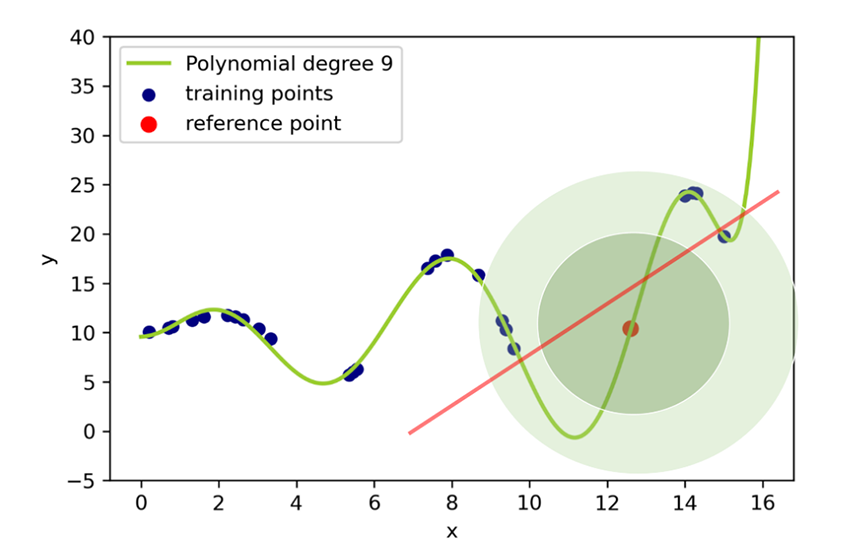

As an example consider the picture below:

The ML function is very wiggly, and we are considering a reference point (red dot) that is in a region quite far away from the other training points.

If we use a too small kernel width, we end up considering only the red dot. In this case, LIME would fail, because we need at least 2 points to draw a line (there are infinite lines passing through a single point; LIME is not able to choose the correct one).

If we enlarge the local region, we end up considering quite distant points and the resulting LIME line is completely unable to represent the local curvature of the ML model!

A toy example of an ML function (green curve) and LIME explanation (red line) built using just the training points. In sparsely populated regions of the function, LIME cannot work well without generating new points. Picture by Giorgio Visani.
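The kernel-width dilemma can be quantified with a small sketch. It uses Kish's effective sample size, (Σw)²/Σw², as a rough count of how many points actually drive the weighted fit; this diagnostic is our own illustrative choice, not part of LIME, and the 1-D training set is made up, with the reference point isolated at 3.0. A narrow kernel leaves effectively one point (the red dot alone), while a wide one pulls in the distant cluster.

```python
import numpy as np


def effective_sample_size(weights):
    # Kish's effective sample size: roughly how many points carry the weighted fit
    w = np.asarray(weights, dtype=float)
    return w.sum() ** 2 / (w ** 2).sum()


# Hypothetical training set: dense around 0, one isolated point at 3.0
x_train = np.array([-1.0, -0.8, -0.5, -0.2, 0.0, 0.3, 3.0])
x0 = 3.0  # reference point (the "red dot") in the sparse region

ess = {}
for width in (0.1, 1.0, 3.0):
    w = np.exp(-((x_train - x0) ** 2) / (2 * width ** 2))  # Gaussian kernel weights
    ess[width] = effective_sample_size(w)
    print(f"kernel width {width}: effective points = {ess[width]:.2f}")
```

With about one effective point the line is undetermined; with many, the fit is dominated by points far from the red dot.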

Maybe you're thinking, "Well, this model is very bad! It is completely overfitting," and that's definitely true. But remember that we use LIME precisely to understand how the ML model behaves.

If we do not generate new points, LIME may be unable to catch such problems in similar situations.

Moreover, every dataset has some regions where training data coverage is low (usually at the extremes of the variables' ranges). If we also want to understand the ML predictions in those regions, we need a tool that lets us probe the curve by generating more points, as the LIME generation step does.

Basically, the sampling step helps us achieve good coverage of the ML function. This is always helpful! The more points we generate, the more accurately the LIME dataset reproduces all the wiggly parts of the f(x) function.

So the right solution is to keep LIME's random generation step. Stability is achieved when we have good coverage of the function f(x) around our reference point: in this way, we retrieve the proper tangent at every LIME call.
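The coverage argument can be checked with a one-feature sketch. Its assumptions are illustrative only: a hypothetical wiggly `f`, normal sampling around the reference point, an exponential proximity kernel and a weighted line, with a hypothetical helper `lime_like_slope` (not the `lime` library). Repeating the explanation 30 times for each sample size, the spread of the slopes across calls shrinks as more points are generated.

```python
import numpy as np


def f(x):
    # Hypothetical black-box ML model: a wiggly 1-D function
    return np.sin(3 * x) + 0.5 * x


def lime_like_slope(x0, n_samples, rng, kernel_width=0.5):
    """Illustrative LIME-style slope from n_samples random points (not the lime library)."""
    x = x0 + rng.normal(size=n_samples)                  # random generation step
    w = np.exp(-((x - x0) ** 2) / kernel_width ** 2)     # proximity weights
    X = np.column_stack([np.ones_like(x), x])            # intercept + feature
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(X * sw[:, None], f(x) * sw, rcond=None)
    return coef[1]


rng = np.random.default_rng(42)
spread = {}
for n in (20, 200, 5000):
    slopes = [lime_like_slope(0.0, n, rng) for _ in range(30)]
    spread[n] = np.std(slopes)  # variability of the "explanation" across LIME calls
    print(f"{n:5d} generated points: std of slope across calls = {spread[n]:.4f}")
```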

STABILITY INDICES

The concepts, ideas, and pictures you find in this section, as well as the use case in the following one, are taken from our paper Statistical stability indices for LIME: obtaining reliable explanations for Machine Learning models [1], published in 2020.

A LIME explanation consists of a Linear Model that keeps only the most important variables, selected with Feature Selection Techniques (Lasso or Stepwise Selection). Each variable in the model has an associated coefficient. Therefore, unstable LIME explanations may differ both in their variables and in their coefficients.

We proposed testing the Stability of LIME explanations with a pair of indices: VSI (Variables Stability Index) and CSI (Coefficients Stability Index).

The idea behind the indices is to repeat LIME n times on the same individual, with identical settings, and compare the resulting Linear explanations.

We consider two Linear Models to be the same if they have the same variables and very similar coefficients for each variable.
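The "same model" criterion can be written down directly. In this sketch an explanation is a dictionary from variable name to coefficient; the function name `same_explanation`, the 10% relative tolerance that decides what counts as "very similar", and the variable names are hypothetical choices for illustration (the indices in [1] judge coefficient similarity with confidence intervals instead).

```python
def same_explanation(exp_a, exp_b, rel_tol=0.10):
    """True if two LIME-style explanations select the same variables with close coefficients.

    exp_a, exp_b: dicts mapping variable name -> coefficient.
    rel_tol: hypothetical tolerance for "very similar" coefficients.
    """
    if set(exp_a) != set(exp_b):  # different selected variables
        return False
    return all(
        abs(exp_a[v] - exp_b[v]) <= rel_tol * max(abs(exp_a[v]), abs(exp_b[v]))
        for v in exp_a
    )


a = {"income": 0.12, "age": -0.05}
b = {"income": 0.11, "age": -0.052}   # same variables, close coefficients
c = {"income": 0.12, "debt": 0.30}    # a different variable was selected
print(same_explanation(a, b), same_explanation(a, c))  # True False
```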

Both indices range from 0 to 100: the higher the value, the more similar the n LIME explanations. Each index checks a different notion of stability, so it is important to obtain high values for both. When this happens, we can be confident that our LIME explanation is stable, since we achieved good coverage of f(x) close to the reference individual.

VSI (Variables Stability Index)

It checks whether the variables are the same across the n LIME Linear Models. Basically, it looks at the stability of the feature selection process: we hope the selected features are more or less the same, i.e., the selection process should be consistent.
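A simplified, VSI-like score can be sketched as the average pairwise overlap (Jaccard similarity) of the variable sets selected by the n LIME calls, scaled to 0 to 100. This is an assumption for illustration: the exact VSI formula is given in [1] and may differ, and `vsi_sketch` and the feature names are hypothetical.

```python
from itertools import combinations


def vsi_sketch(variable_sets):
    """VSI-like score in [0, 100]: mean pairwise Jaccard similarity of selected variables.

    variable_sets: one set of selected feature names per LIME call (at least two calls).
    """
    pairs = list(combinations(variable_sets, 2))
    if not pairs:
        raise ValueError("need at least two LIME calls")
    # Jaccard similarity: shared variables over all variables seen in the pair
    scores = [len(a & b) / len(a | b) if a | b else 1.0 for a, b in pairs]
    return 100 * sum(scores) / len(scores)


stable = [{"income", "age"}] * 3
unstable = [{"income", "age"}, {"debt", "age"}, {"income", "debt"}]
print(vsi_sketch(stable), vsi_sketch(unstable))  # 100.0 and about 33.3
```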

CSI (Coefficients Stability Index)

It compares the coefficients associated with each variable, across the different LIME models.

The index considers each variable separately (it is only meaningful to compare coefficients related to the same feature).

For a single variable, CSI takes its coefficients in the n LIME explanations and builds a confidence interval for each one (the intervals account for small changes in the coefficients caused by the statistical error inherent in every model).

The intervals are compared to obtain a coefficients' concordance value for the variable: when two confidence intervals overlap, the coefficients are considered equal, whereas disjoint intervals mean that the two coefficients' ranges are completely different, so the coefficients cannot have the same value.

The concordance values of the single variables are then averaged over all the features, giving the overall CSI index.
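A simplified, CSI-like score following these steps can be sketched as below. The pairwise overlap share, the 95% normal-approximation intervals (coef ± 1.96·se), the assumption that each LIME call reports a standard error for every coefficient, the name `csi_sketch` and the input numbers are all illustrative choices; see [1] for the exact definition.

```python
from itertools import combinations


def csi_sketch(coef_runs, z=1.96):
    """CSI-like score in [0, 100] from per-call coefficients and standard errors.

    coef_runs: dict mapping variable name -> list of (coefficient, std_error),
    one pair per LIME call. Assumes normal-approximation intervals coef ± z * se.
    """
    concordances = []
    for runs in coef_runs.values():
        intervals = [(c - z * se, c + z * se) for c, se in runs]
        pairs = list(combinations(intervals, 2))
        if not pairs:
            continue  # a variable seen in only one call cannot be compared
        # Two intervals overlap when each one starts before the other ends
        overlaps = [lo1 <= hi2 and lo2 <= hi1 for (lo1, hi1), (lo2, hi2) in pairs]
        concordances.append(sum(overlaps) / len(pairs))
    if not concordances:
        raise ValueError("need at least two LIME calls for some variable")
    return 100 * sum(concordances) / len(concordances)  # average over the variables


coef_runs = {
    "income": [(0.50, 0.05), (0.52, 0.05), (0.49, 0.04)],  # all intervals overlap
    "age": [(0.30, 0.02), (-0.10, 0.02), (0.31, 0.02)],    # one call disagrees
}
print(csi_sketch(coef_runs))  # about 66.7
```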

Use Case and Application

Now I’ll show you an example of the indices’ usefulness.

Consider a Gradient Boosting model that distinguishes between good and bad payers (banks usually want to know the probability that clients asking for a loan will pay it back, and ML models try to predict whether the clients will return the lent money).

Gradient Boosting is known to be a black-box model, so we must use LIME to understand why a particular individual (Unit 35) has been classified as a good payer.

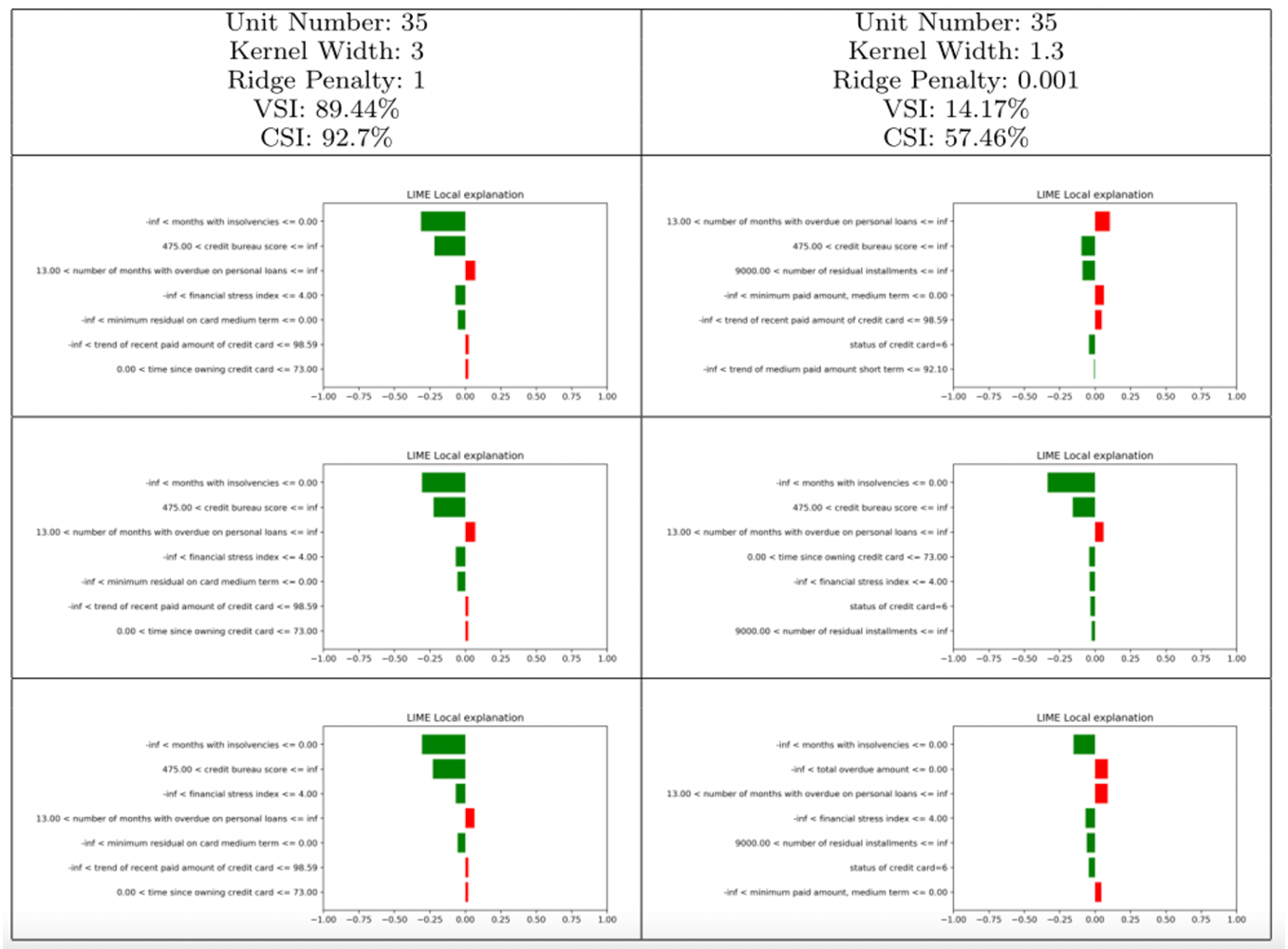

In the Table, you can see how a good choice of LIME's parameters brings reliable and stable explanations, while the LIME explanations for the same guy are totally unstable when bad parameters are chosen.

The Stability Indices help you spot this instability (indeed, in the right part of the Table the LIME explanations in the graphs are very different, and the Indices are quite low!)

The LIME explanation is very simple to understand: summing up the bars' values and the intercept, we obtain LIME's approximation of the ML prediction of the default probability for the individual in question (Unit 35).

The length of each bar highlights the specific contribution of the corresponding variable: the green bars push the model towards the "good payer" prediction, whereas the red ones push it towards "bad payer".
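The additive reading of the bars amounts to a one-line sum. The helper name and the numbers below are made up purely for illustration (they are not the values for Unit 35 in the Table), and the result is the output of LIME's local linear surrogate, which matches the ML prediction only as closely as the local fit allows.

```python
def lime_local_prediction(intercept, contributions):
    # Local surrogate output: intercept plus the sum of the bar values
    return intercept + sum(contributions.values())


# Hypothetical bar values for an illustrative individual
bars = {"income": -0.08, "past_defaults": 0.05, "age": -0.02}
print(round(lime_local_prediction(0.20, bars), 4))  # 0.15
```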

TO RECAP

In this article, I tackle the big issue of instability in LIME explanations, which undermines trust in ML methods. I show this from a mathematical standpoint, as well as with real case applications.

I argue that practitioners often do not pay attention to the instability issue, especially because there were no tools to control for it.

I introduce a pair of stability indices for LIME, which can help practitioners spot such problems. I encourage everyone using LIME to report the indices' values, to make sure the explanations are reliable.

This is the first step towards trustworthy LIME explanations for Machine Learning models.

[1] Visani, G., Bagli, E., et al., 2020. Statistical stability indices for LIME: obtaining reliable explanations for Machine Learning models. arXiv preprint.

[2] Zafar, M.R., Khan, N.M., 2019. DLIME: A deterministic local interpretable model-agnostic explanations approach for computer-aided diagnosis systems. arXiv preprint.